GCS101 Access Google Storage Buckets Programmatically

We need a key file for a GCP service account to authenticate and access GCP resources in a project from external code, including Google Colab notebooks. In this post, I summarize the process so that you can download files from Google Cloud Storage (GCS) programmatically. Let’s start with how to access GCS anonymously.

Access a public Google Storage bucket anonymously

Use this template to perform the operation:

    from google.cloud import storage


    def download_public_file(bucket_name, source_blob_name, destination_file_name):
        """Download a blob from a public bucket without credentials."""
        # bucket_name = "your-bucket-name"
        # source_blob_name = "storage-object-name"
        # destination_file_name = "local/path/to/file"

        # An anonymous client sends no credentials, so it only works on public data
        storage_client = storage.Client.create_anonymous_client()

        bucket = storage_client.bucket(bucket_name)
        blob = bucket.blob(source_blob_name)
        blob.download_to_filename(destination_file_name)

        print(
            "Downloaded public blob {} from bucket {} to {}.".format(
                source_blob_name, bucket.name, destination_file_name
            )
        )

For example, to download the training and test datasets of the Kaggle competition Tabular Playground Series - Mar 2021 from public URLs:

    bucket_name = 'gcs-station-168'

    # training dataset (public URL: https://storage.googleapis.com/gcs-station-168/train.tfrecords)
    source_blob_name = 'train.tfrecords'
    destination_file_name = './train.tfrecords'
    download_public_file(bucket_name, source_blob_name, destination_file_name)

    # test dataset (public URL: https://storage.googleapis.com/gcs-station-168/test.tfrecords)
    source_blob_name = 'test.tfrecords'
    destination_file_name = './test.tfrecords'
    download_public_file(bucket_name, source_blob_name, destination_file_name)

Reference: https://cloud.google.com/storage/docs/access-public-data#code-samples
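Since public objects are also reachable over plain HTTPS (the public URLs in the comments above follow the pattern https://storage.googleapis.com/BUCKET/OBJECT), the same download can be sketched with the Python standard library alone. This is a minimal sketch, not part of the official sample: the helper names `public_url` and `download_public_url` are made up for illustration, and it assumes the object is publicly readable.

```python
import shutil
import urllib.parse
import urllib.request


def public_url(bucket_name, blob_name):
    """Build the public HTTPS URL of an object (hypothetical helper)."""
    # Percent-encode the object name but keep "/" so nested names stay readable.
    return "https://storage.googleapis.com/{}/{}".format(
        bucket_name, urllib.parse.quote(blob_name, safe="/")
    )


def download_public_url(bucket_name, blob_name, destination_file_name):
    """Stream a public object to a local file (needs network access)."""
    url = public_url(bucket_name, blob_name)
    with urllib.request.urlopen(url) as response, \
            open(destination_file_name, "wb") as out:
        # Copy in chunks so large files are not held in memory at once.
        shutil.copyfileobj(response, out)
    return url


# Prints the same URL that appears in the training dataset comment above.
print(public_url("gcs-station-168", "train.tfrecords"))
```

Calling `download_public_url('gcs-station-168', 'train.tfrecords', './train.tfrecords')` would then fetch the file without installing google-cloud-storage, which can be handy for a quick check outside Colab.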

Access a private Google Storage bucket with permission

Create a service account from Google Cloud Shell

For example, to let Google account B (anyone you choose, including the project owner) access private GCS buckets in the project strategic-howl-305522, which belongs to Google account A, we need the following setup:

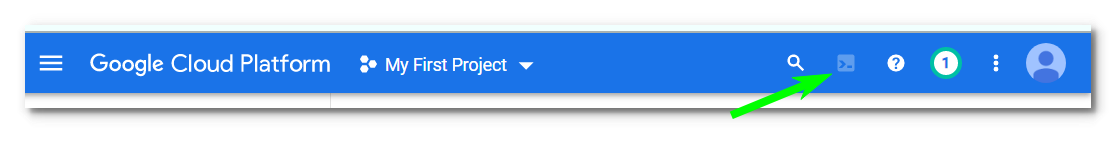

In the GCP console of Google account A, launch the Cloud Shell terminal.

Create a service account named ‘test-service-account’ (note: only lowercase alphanumeric characters and hyphens are allowed) for the project strategic-howl-305522:

    $ P='strategic-howl-305522'
    $ S='test-service-account'
    $ gcloud iam service-accounts create "$S"

Grant permissions to the service account:

    $ gcloud projects add-iam-policy-binding "$P" --member "serviceAccount:$S@$P.iam.gserviceaccount.com" --role "roles/owner"

Generate the key file, in this case named test-colab.json:

    $ K='test-colab.json'
    $ gcloud iam service-accounts keys create "$K" --iam-account "$S@$P.iam.gserviceaccount.com"

Download the key file:

    $ cloudshell download "$K"

Upload the key file to the Colab directory, in this case /content/. Then run the following code to list all the buckets in the project:

    import os

    from google.cloud import storage

    # Point the client library at the uploaded key file
    os.environ['GOOGLE_APPLICATION_CREDENTIALS'] = '/content/test-colab.json'

    storage_client = storage.Client()
    buckets = storage_client.list_buckets()

    print('-- List of buckets in project "' + storage_client.project + '"')
    for b in buckets:
        print(b.name)

We can further list all the files inside a specific bucket, in this case gcs-station-168:

    bucket_name = 'gcs-station-168'


    def list_files(bucket_name):
        """List all files in a GCS bucket."""
        bucket = storage_client.get_bucket(bucket_name)
        blobs = bucket.list_blobs()
        # Keep only names containing a dot, which skips folder-like entries
        return [blob.name for blob in blobs if '.' in blob.name]


    list_files(bucket_name)
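If the client fails to authenticate, a quick look inside the uploaded key file can help rule out a wrong or truncated upload. The sketch below assumes the standard service account key layout (a JSON file with `type`, `project_id`, `private_key` and `client_email` fields); the helper name `describe_key_file` is hypothetical and not part of any Google library.

```python
import json


def describe_key_file(path):
    """Return (client_email, project_id) from a service account key file."""
    with open(path, "r", encoding="utf-8") as f:
        info = json.load(f)

    # Service account keys identify themselves with this type value.
    if info.get("type") != "service_account":
        raise ValueError("{} is not a service account key file".format(path))

    # Fields the client library needs in order to sign requests.
    required = ("client_email", "project_id", "private_key")
    missing = [key for key in required if key not in info]
    if missing:
        raise ValueError("key file is missing fields: {}".format(", ".join(missing)))

    return info["client_email"], info["project_id"]
```

For the key created above, `describe_key_file('/content/test-colab.json')` should return the test-service-account@strategic-howl-305522.iam.gserviceaccount.com address together with the project ID. The same path can also be handed to `storage.Client.from_service_account_json(path)` to build a client from that key explicitly.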

Reference: https://colab.research.google.com/drive/1LWhrqE2zLXqz30T0a0JqXnDPKweqd8ET#scrollTo=GBpnpPF4I8Wn
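To close the loop with the goal of this post, the listing step can be combined with `download_to_filename` from the first section to pull every file out of a private bucket. This is only a sketch: `download_bucket_files` is a hypothetical helper, it expects an authenticated `storage.Client` to be passed in, and it assumes blob names ending in "/" are folder placeholders that can be skipped.

```python
import os


def download_bucket_files(storage_client, bucket_name, destination_dir):
    """Download every file-like blob in a bucket; return the local paths."""
    downloaded = []
    for blob in storage_client.list_blobs(bucket_name):
        # Skip "folder" placeholders, whose names end with a slash.
        if blob.name.endswith("/"):
            continue

        # Mirror the blob's "/"-separated name as a local directory tree.
        local_path = os.path.join(destination_dir, *blob.name.split("/"))
        parent = os.path.dirname(local_path)
        if parent:
            os.makedirs(parent, exist_ok=True)

        blob.download_to_filename(local_path)
        downloaded.append(local_path)
    return downloaded
```

In Colab this would be called as `download_bucket_files(storage_client, 'gcs-station-168', '/content/data')`, where `/content/data` is just an example target directory. Passing the client in as an argument keeps the function independent of how the credentials were loaded.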