Transfer Kaggle Competition Data into Google Storage Buckets

Kaggle competitors may have the chances to migrate their competition data from Kaggle to Colab partially because they have run out of their monthly quota to use free GPU or TPU that is provided by Kaggle. In this post, I will walk you through the routine for transferring Kaggle data to Google Cloud Storage Bucket. I will also inform you of a possible setback during data migration when the data to migrate are originally organized in a nested file system on Kaggle.

Loading Competition Dataset

Prerequisites

- An Google Cloud Platform (GCP) account to access Google Cloud Console.

- Create a VM instance for this project from the Google Cloud Console.

- Navigate GCP sidebar to Storage and create a new bucket (free 5 GB-month Standard Storage as of March 1, 2021)

Task Outline:

- Create a new GCS Bucket.

- Upload Kaggle Titanic data to a GCS Bucket.

- Download your data from the GCS Bucket.

- Preview the data with Pandas profiling.

Dataset:

In Kaggle.com, navigate to

< > Notebooks, click+ New Notebook, and choose+ Add data.Select Competition Data, search for Titanic data (“Titanic - Machine Learning from Disaster”), and click

Add.The Titanic dataset would appear in the

/kaggle/inputdirectory as shown below:# /kaggle . ├── input │ └── titanic │ ├── gender_submission.csv │ ├── test.csv │ └── train.csv ├── lib │ └── kaggle │ └── gcp.py └── working └── __notebook_source__.ipynbimport os os.chdir('/kaggle/input/titanic') os.listdir()Output: ['train.csv', 'test.csv', 'gender_submission.csv']Note: We can write up to 20GB to the directory

/kaggle/working/that gets preserved outside of running sessions.

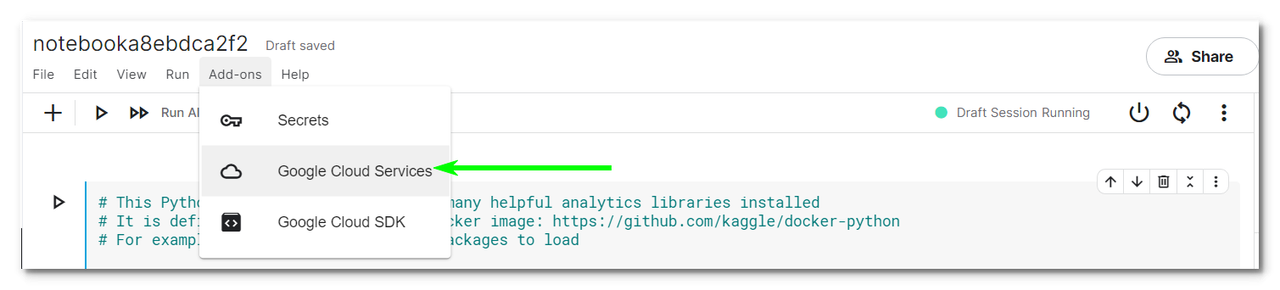

Google Cloud Services Intergration:

Grant Kaggle access to your Google Cloud Storage service.

To do this, select Google Cloud Services from the Add-ons menu.

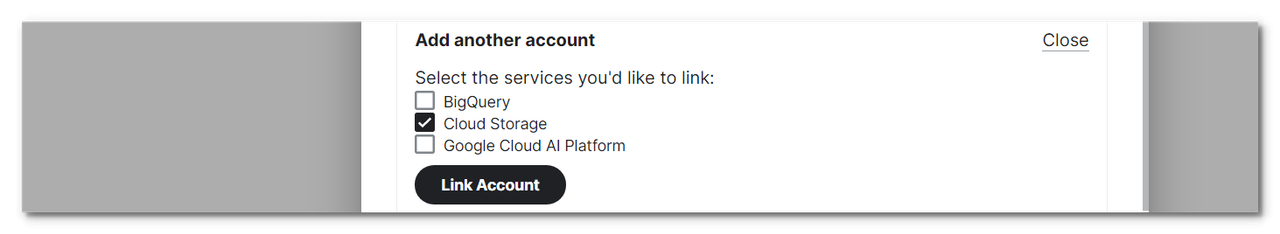

Tick Cloud Storage and then click the Link Account button.

Code:

Import modules

import os import pandas as pd import pandas_profiling as pp from google.cloud import storage PROJECT = 'strategic-howl-305522' bucket_name = 'titanic_train' storage_client = storage.Client(project=PROJECT)Create a GCS Bucket, if needed.

Firstly, import helper functions for data transfer:

# copy and paste all the helper function defined above. def create_bucket(bucket_name): bucket = storage_client.bucket(bucket_name) bucket.create(location='US') print(f'Bucket {bucket.name} created.') def upload_blob(bucket_name, source_file_name, destination_blob_name): """Uploads a file to the bucket. https://cloud.google.com/storage/docs/ """ bucket = storage_client.get_bucket(bucket_name) blob = bucket.blob(destination_blob_name) blob.upload_from_filename(source_file_name) print('File {} uploaded to {}.'.format( source_file_name, destination_blob_name)) def list_blobs(bucket_name): """Lists all the blobs in the bucket. https://cloud.google.com/storage/docs/""" blobs = storage_client.list_blobs(bucket_name) for blob in blobs: print(blob.name) def download_to_kaggle(bucket_name, destination_directory, file_name): """Takes the data from your GCS Bucket and puts it into the working directory of your Kaggle notebook""" os.makedirs(destination_directory, exist_ok = True) full_file_path = os.path.join(destination_directory, file_name) blobs = storage_client.list_blobs(bucket_name) for blob in blobs: blob.download_to_filename(full_file_path) def upload_files(bucket_name, source_folder): bucket = storage_client.get_bucket(bucket_name) for filename in os.listdir(source_folder): blob = bucket.blob(filename) blob.upload_from_filename(source_folder + filename)Secondly, create a bucket named

titanic_trainif it hasn’t been created before.try: create_bucket(bucket_name) except: passUpload to the GCS Bucket.

local_data = '/kaggle/input/titanic/train.csv' blob_name = 'train.csv' upload_blob( bucket_name, local_data, blob_name) # blob: file transferred from local print(f'Blob inside of {bucket_name} bucket:') list_blobs(bucket_name)Reversely, download the blob from the Bucket.

destination_dir = '/kaggle/working/titanic/' file_name = 'train_from_gcs.csv' download_to_kaggle( bucket_name, destination_dir, file_name)# output . ├── input │ └── titanic │ ├── gender_submission.csv │ ├── test.csv │ └── train.csv ├── lib │ └── kaggle │ └── gcp.py └── working ├── __notebook_source__.ipynb └── titanic └── train_from_gcs.csvPreview the data just downloaded.

os.listdir('/kaggle/working/titanic/')full_file_path = os.path.join(destination_dir, file_name) df_from_gcs = pd.read_csv(full_file_path) pp.ProfileReport(df_from_gcs)

Transferring Data from Nested Folders

In some Kaggle competitions, the given training/test datasets are organized in a nested folder system. How do we resolve this challenge when we transfer this kind of data to GCS Buckets? The answer is to make use of the walk() function of the os package. Here is the quick start guide for the data extraction step to get data ready for transfer from Kaggle to GCS.

Situation

Suppose our competition data folder is structured in the following order:

.

├── osic-pulmonary-fibrosis-progression

│ ├── test

│ │ ├── ID00419637202311204720264

│ │ │ ├── 8.dcm

│ │ │ └── 9.dcm

│ │ ├── ID00421637202311550012437

│ │ │ ├── 98.dcm

│ │ │ └── 99.dcmIn this case, the test folder is located in the root of the competition project directory osic-pulmonary-fibrosis-progression. The tricky part for loading test datasets is that individual .dcm files (e.g. 8.dcm, 9.dcm, and98.dcm) are stored in the folders which are two level deeper from the root project directory.

How do we resolve this challenge when we upload data organized in this style to a Google Storage Bucket?

Task

It turns out we can make use of the os package’s function **walk()**to walk through every subfolder iteratively and return names of directories and files it find on its traversal of the file system. Here is a quick example.

Simple example

We have such a file system in the default Kaggle root directory. The feature is that in any individual folder, you would find either directories or files, but not both.

/kaggle

.

├── input

├── lib

│ └── kaggle

│ └── gcp.py

└── working

└── __notebook_source__.ipynbRun this code in a new notebook cell:

import os

for root, folders, files in os.walk('/kaggle'):

print(f'root: {root}') # the absolute path of a root folder

print(f' folders: {folders}') # the list of subfolders in this root folder

print(f' files: {files}') # the list of files in this root folderThe three returns of the function os.walk() reveals the content of the file system inside out:

root: /kaggle

folders: ['lib', 'input', 'working']

files: []

root: /kaggle/lib

folders: ['kaggle']

files: []

root: /kaggle/lib/kaggle

folders: []

files: ['gcp.py']

root: /kaggle/input

folders: []

files: []

root: /kaggle/working

folders: []

files: ['__notebook_source__.ipynb']Advanced Example: Mix of folders and directories

Suppose we have the following file structure: Two folders, folder_a and folder_b, are located in the root directory test. Inside the latter we have two more subfolders, sub_c and sub_d.

Run the code below in a new notebook cell to generate our toy file system:

!mkdir /toy_system

%cd /toy_system

!mkdir folder_a

!mkdir folder_b

%cd /toy_system/folder_a

!touch a1.txt

!touch a2.txt

%cd /toy_system//folder_b

!touch b1.txt

!touch b2.txt

!mkdir sub_c

!mkdir sub_d

%cd sub_c

!touch c1.txtThe toy file system is structured in such a style:

/toy_system

.

├── folder_a

│ ├── a1.txt

│ └── a2.txt

└── folder_b

├── b1.txt

├── b2.txt

├── sub_c

│ └── c1.txt

└── sub_d

4 directories, 5 filesos.walk() traverses the whole file structure and reveals its content folder by folder:

import os

for root, folders, files in os.walk("/toy_system"):

print(f'root: {root}') # the full path of a root

print(f'basename: {os.path.basename(root)}') # the basename of a root

path = root.split(os.sep)

print(f'path: {path}')

print(f'Content:')

print(f' folders: {folders}')

print(f' files : {files}\n')The preceding code outputs the file structure as follows:

root: /toy_system

basename: toy_system

path: ['', 'toy_system']

Content:

folders: ['folder_a', 'folder_b']

files : []

root: /toy_system/folder_a

basename: folder_a

path: ['', 'toy_system', 'folder_a']

Content:

folders: []

files : ['a1.txt', 'a2.txt']

root: /toy_system/folder_b

basename: folder_b

path: ['', 'toy_system', 'folder_b']

Content:

folders: ['sub_d', 'sub_c']

files : ['b2.txt', 'b1.txt']

root: /toy_system/folder_b/sub_d

basename: sub_d

path: ['', 'toy_system', 'folder_b', 'sub_d']

Content:

folders: []

files : []

root: /toy_system/folder_b/sub_c

basename: sub_c

path: ['', 'toy_system', 'folder_b', 'sub_c']

Content:

folders: []

files : ['c1.txt']It turns out that to get the list of absolute path for each files (excluding directories), we simply run:

for root, folders, files in os.walk('/toy_system'):

if files:

for file in files:

print(os.path.join(root, file))Output is what we expect:

/toy_system/folder_a/a1.txt

/toy_system/folder_a/a2.txt

/toy_system/folder_b/b2.txt

/toy_system/folder_b/b1.txt

/toy_system/folder_b/sub_c/c1.txtReferences

| [1] | How to move data from Kaggle to GCS and back by Paul Mooney |

| [2] | Google Cloud Client Libraries for google-cloud-storage API doc |

Appendix

I. Change directories

%cd ~/path-to-your-file-at-home-drecitory

!ls -lashDon’t put exclamation mark as you do in a Google Colab notebook, and you need to make them into one line. Otherwise, the directory won’t change.

II. Internet connectivity

Downloading with Internet connection

To download external data set or custom Python packages into your Kaggle environment using commands such as !wget or !pip install, turn it on from the settings menu of the kernel editor.

Downloading without Internet connection

(Todo) How to install without connection in Kaggle environment